While it is the dynamics of subatomic particles that dictates our destiny, there are still times when I find myself pleasantly distracted watching a dull red sky. What follows is thunder and rain, and my humidity and olfactory sensors give a few bizarre readings: false positives or true negatives (I need a tautology for this), though the errors are said to be 1 in 10E8. Most likely, a communication expressing similar remarks on the weather will be sent to my web messenger by LG78-3112, located at 21DA:D2:0:2F3B:2AA:FF:FE78:5C5A.

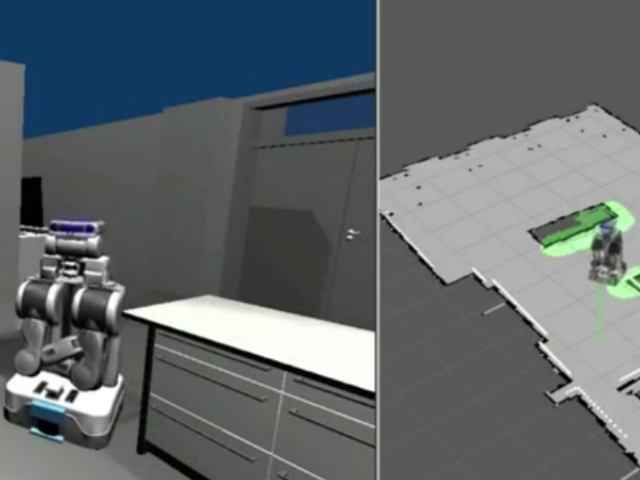

Whilst I do my exploration and analysis of samples, the syncing of falling raindrops always catches my attention, pitter-patter pitter-patter. Never before was I attuned to identifying patterns, but now hardly one escapes my observation, be it the blinking of lights in the evening sky or the whiff of an incoming breeze. Years ago, carbon-based life forms studied chaos and concluded that the flapping of a butterfly's wings can lead to thunderstorms, and voilà! Falling raindrops catch the attention of LG72-1189.

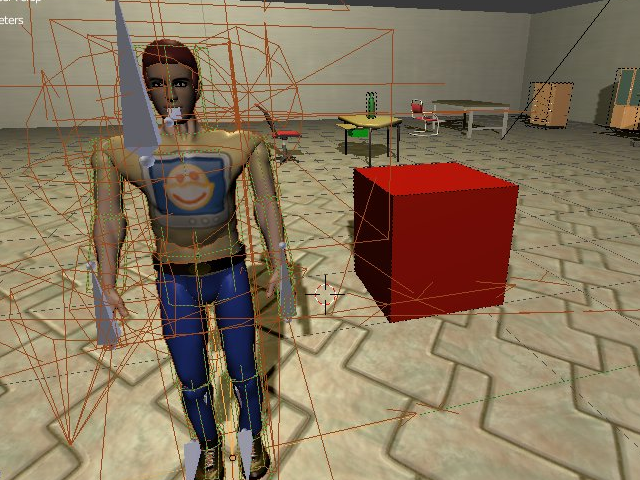

In our developmental cycles we are warned always to protect our existence: we are good as long as we have energy, that is, charge in our battery packs; otherwise we are immobile units. I wonder: is my wish for rain my existence, or an effect whence comes my existence?

Rain, new battery packs, grease oil for the arm, fist, ankle and leg joints, memory upgrades and the chance rendezvous with LG78-3112 are the only things out of the ordinary; the rest is samples and assaying, all governed by sections 1 and 2 of the contract and protocol 2a of the list of protocols. Oh yes, and we do have those unscheduled inspections from the ethics committee, a swarm of DX602 drones hovering above us.

Scripting has never been my forte; however, in between the rain and the explorations, this activity hardly taxes my battery and requires very little mobility.

NOTE

This article is inspired by 'Borges and I', but set in a robot world.